Summary

Optoacoustic imaging is a relatively new, non-invasive imaging tool that provides real-time feedback and is increasingly applied in clinical and pre-clinical settings. Studies have already demonstrated its unique capabilities as a diagnostic tool for breast cancer, Crohn’s disease, atherosclerotic carotid plaques, and skin cancer. The DLBIRHOUI project tackles some of the major challenges in optoacoustic imaging with two aims. The first is to speed up clinical adoption of the technology by developing data-driven methodologies. The second is to stimulate research in the field by releasing massive experimental datasets.

Introduction

Optoacoustic (OA) imaging is a relatively new imaging technique that provides contrast complementary to existing imaging technologies such as ultrasound (US). However, several challenges still prevent wider clinical adoption of OA. Currently, customized hardware and imaging setups are required to obtain high-quality OA images. As a tomographic imaging modality with limited tissue penetration, OA imaging also suffers from limited-view challenges. Furthermore, physical parameters of the imaged medium, such as the speed of sound, are oversimplified during image reconstruction. This degrades image quality and reduces the reliability of analytical techniques such as spectral unmixing.
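The speed-of-sound point can be made concrete with a small calculation. The values below are illustrative assumptions: a source 30 mm deep, a true tissue speed of sound of 1540 m/s, and a reconstruction that assumes 1500 m/s. The sketch converts the measured time of flight back into a distance using the wrong speed of sound.

```python
def depth_error_mm(depth_m: float, c_true: float, c_assumed: float) -> float:
    """Depth error in mm when a measured time of flight is converted back to a
    distance using an assumed, rather than the true, speed of sound."""
    time_of_flight = depth_m / c_true           # travel time in seconds
    reconstructed_depth = c_assumed * time_of_flight
    return (reconstructed_depth - depth_m) * 1e3


# Illustrative values: source 30 mm deep, 1540 m/s in tissue, 1500 m/s assumed.
print(f"{depth_error_mm(0.03, 1540.0, 1500.0):.2f} mm")  # → -0.78 mm
```

That is roughly 0.8 mm along a single sensor path. In a tomographic reconstruction, each detector sees a different path, so the mismatch typically shows up as blurring and distorted geometry rather than a clean shift.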

DLBIRHOUI is a collaboration between ETH Zurich and the Swiss Data Science Center (SDSC). The project has procured a first-of-its-kind collection of large, well-organized OA datasets. It has also proposed novel methodologies that address some of the major challenges in OA, working on both the raw acquired signals and the reconstructed tomographic images.

A glimpse of challenges and solutions

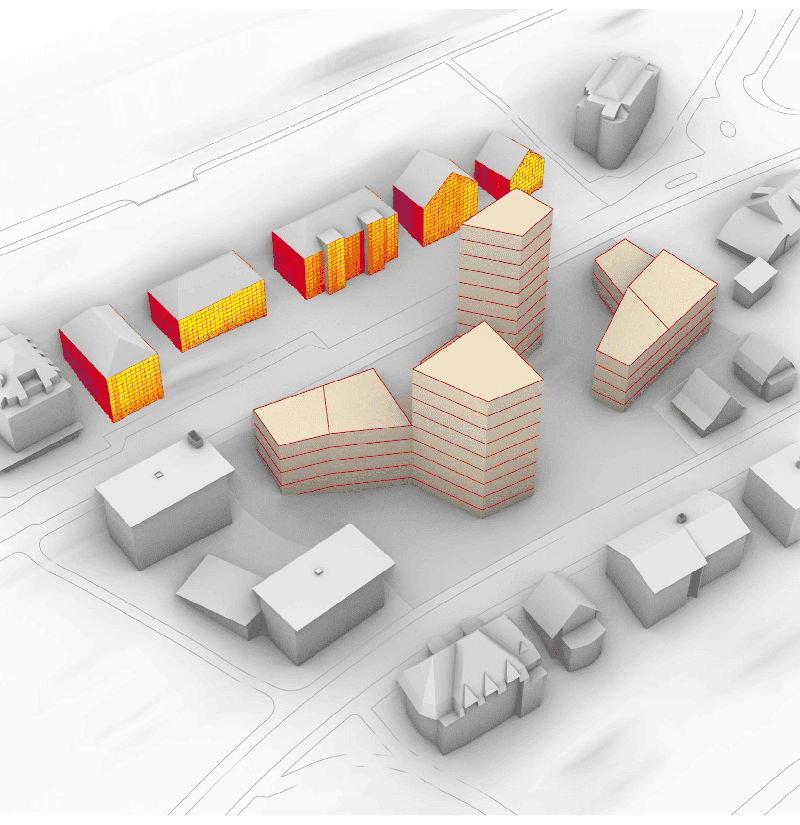

Despite its vast potential, OA is a challenging field of research, because setting up and conducting high-quality experiments requires specialized know-how and customized hardware. The lack of publicly available OA data makes this worse. Only a limited number of groups worldwide can actively research OA, and findings across the existing literature are hard to compare fairly. Drawing on our expertise with large-scale data, we procured a large OA dataset (OADAT) for the community, with an unprecedented size of 2 million instances. The dataset is designed to capture the many challenges in the OA imaging field. It contains both experimental data from volunteers and simulated data, collected across multiple transducer arrays and acquisition settings (Figure 1). Using these datasets, we can readily generate further synthetic experimental images (Figure 2).

Figure 2: Feel free to explore our online app and generate experimental images using our trained model for the linear array (left) and the semi-circle array (right).

Easy-to-use, open-source, and stable Python packages are crucial for reproducibility and for the wide adoption of imaging systems. During the project, we therefore developed open-source Python image reconstruction packages for OA (pyoat) and US (pyruct) systems. The “pyoat” package contains back-projection and model-based image reconstruction algorithms. The “pyruct” package includes a delay-and-sum beamformer for reflection ultrasound computed tomography (RUCT). These packages help users integrate reconstruction algorithms into deep learning pipelines built with the available Python frameworks.
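The sketch below is not the pyoat or pyruct API. It is a hypothetical, self-contained NumPy illustration of the delay-and-sum principle behind back-projection, assuming a 2D geometry, point-like sensors, and a uniform speed of sound. For each pixel, it takes the sample each sensor recorded one travel time after excitation and averages those values. It omits the time-derivative term and the solid-angle weighting used in universal back-projection. A reflection-ultrasound beamformer would also add the transmit path to each delay.

```python
import numpy as np


def delay_and_sum(signals, sensor_xy, grid_x, grid_y, fs, c=1500.0):
    """Minimal delay-and-sum (back-projection) reconstruction for 2D OA data.

    signals:   (n_sensors, n_samples) recorded pressure traces
    sensor_xy: (n_sensors, 2) sensor positions in metres
    grid_x/y:  1D arrays of pixel coordinates in metres
    fs:        sampling frequency in Hz
    c:         assumed uniform speed of sound in m/s
    """
    X, Y = np.meshgrid(grid_x, grid_y, indexing="xy")  # shape (ny, nx)
    image = np.zeros_like(X)
    n_samples = signals.shape[1]
    for trace, (sx, sy) in zip(signals, sensor_xy):
        # One-way time of flight from every pixel to this sensor, as a sample index.
        idx = np.rint(np.hypot(X - sx, Y - sy) / c * fs).astype(int)
        valid = idx < n_samples
        # Add the value this sensor recorded at the matching delay.
        image[valid] += trace[idx[valid]]
    return image / len(sensor_xy)


# Toy data: 64 sensors on a 40 mm semicircle and a point source at the origin.
fs, c = 40e6, 1500.0
angles = np.linspace(0.0, np.pi, 64)
sensors = 0.04 * np.column_stack([np.cos(angles), np.sin(angles)])
signals = np.zeros((64, 2048))
signals[:, int(round(0.04 / c * fs))] = 1.0   # impulse after 40 mm of travel
grid = np.linspace(-0.005, 0.005, 101)
img = delay_and_sum(signals, sensors, grid, grid, fs, c)
iy, ix = np.unravel_index(np.argmax(img), img.shape)
print(grid[ix], grid[iy])  # peak location in metres
```

Every trace lines up at the origin, so the image peaks there. Elsewhere only a subset of traces line up, which produces the arc-shaped streaks typical of back-projection from limited data.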

Hybridizing OA systems with other imaging modalities, such as US, provides complementary information when assessing several biological conditions. Similarly, combining magnetic resonance imaging (MRI) with OA can enrich the anatomical, functional, and molecular information acquired in hybrid settings. However, implementing such systems requires registering the images from the two modalities. To this end, we implemented deep learning methods during the project that successfully register MRI and OA images.
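Multimodal registration needs a similarity measure that does not assume MRI and OA images share intensity values. Mutual information is one classic choice. The source does not say which loss the project's deep learning models used, so the function below is a generic, hypothetical illustration rather than the project's method.

```python
import numpy as np


def mutual_information(img_a, img_b, bins=32):
    """Mutual information (nats) between two same-shaped images, estimated
    from their joint intensity histogram."""
    joint, _, _ = np.histogram2d(img_a.ravel(), img_b.ravel(), bins=bins)
    p_ab = joint / joint.sum()
    p_a = p_ab.sum(axis=1, keepdims=True)   # marginal of image A
    p_b = p_ab.sum(axis=0, keepdims=True)   # marginal of image B
    nz = p_ab > 0                           # skip empty bins to avoid log(0)
    ratio = p_ab[nz] / (p_a * p_b)[nz]
    return float(np.sum(p_ab[nz] * np.log(ratio)))


rng = np.random.default_rng(0)
mri = rng.random((64, 64))              # stand-in for an MRI slice
oa = 1.0 - mri                          # same structure, inverted contrast
mi_aligned = mutual_information(mri, oa)
mi_shifted = mutual_information(mri, np.roll(oa, 5, axis=1))  # 5-pixel misalignment
print(mi_aligned > mi_shifted)  # → True
```

The score is high whenever one image's intensities predict the other's, even with inverted contrast. A registration model can therefore be trained to maximize it over spatial transforms.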

Limited-view challenges are a significant obstacle in OA imaging. Linear ultrasound arrays are often coupled with lasers to acquire both OA and US images, providing complementary contrast from both modalities (see Figure 3 for an example). However, as visible in the bottom right of Figure 1, the limited angular coverage of a linear array leads to distorted geometries and a plethora of undesired artifacts. Using paired data from our dataset, OADAT, we can optimize a data-driven method that both corrects the distorted geometries and reduces the undesired artifacts (Figure 4).
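Paired data turns artifact correction into ordinary supervised regression: minimize the difference between the model's output on a limited-view image and its paired reference image. The project used deep networks. The sketch below deliberately swaps in the simplest possible model, a single 3 x 3 linear filter fitted by least squares, only to show the paired-training setup. The function names and toy data are hypothetical, and `sliding_window_view` requires NumPy 1.20 or later.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view


def extract_patches(img, k=3):
    """Every k x k patch of img (valid region only), one patch per row."""
    return sliding_window_view(img, (k, k)).reshape(-1, k * k)


def fit_linear_filter(inputs, targets, k=3):
    """Least-squares fit of a single k x k filter mapping each limited-view
    input image to its paired reference image (a toy stand-in for a CNN)."""
    pad = k // 2
    A = np.vstack([extract_patches(x, k) for x in inputs])
    # Only centre pixels have a full k x k neighbourhood in the input.
    b = np.concatenate([y[pad:-pad, pad:-pad].ravel() for y in targets])
    weights, *_ = np.linalg.lstsq(A, b, rcond=None)
    return weights.reshape(k, k)


# Toy paired data: each "reference" is an unknown 3 x 3 filter applied to its input.
rng = np.random.default_rng(0)
k_true = rng.normal(size=(3, 3))
inputs = [rng.random((32, 32)) for _ in range(4)]
targets = []
for x in inputs:
    y = np.zeros_like(x)
    y[1:-1, 1:-1] = (extract_patches(x) @ k_true.ravel()).reshape(30, 30)
    targets.append(y)

k_fit = fit_linear_filter(inputs, targets)
print(np.allclose(k_fit, k_true))  # → True
```

A real network replaces the single filter with many nonlinear layers, but the ingredient that paired data supplies is the same: a per-pixel target for every input.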

Going one step further, we also implemented a generative modeling approach that can be optimized for image-to-image translation without paired data. This is a crucial benefit, because acquiring paired OA images across different kinds of transducer arrays and settings is often infeasible. Figure 5 shows an example: the corresponding experimental image, acquired with an array of wider angular view, still contains more artifacts than the image generated by the neural network. Furthermore, we can synthesize realistic experimental images from mere simulations, as shown in Figure 6. This is particularly useful for procuring data with controlled scenes (e.g., generating digital twins).

… generated using our generative model.
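The source does not specify the generative architecture. CycleGAN-style cycle consistency is one standard way to train unpaired image-to-image translation, and it is assumed here purely for illustration. The function and toy "generators" below are hypothetical.

```python
import numpy as np


def cycle_consistency_loss(x_a, x_b, g_ab, g_ba):
    """CycleGAN-style cycle-consistency loss (mean absolute error).

    g_ab translates domain A (e.g. limited-view images) into domain B (e.g.
    wider-view images) and g_ba translates back. Unpaired data offers no target
    for g_ab(x_a), but a round trip A -> B -> A should reproduce the input.
    """
    loss_a = np.mean(np.abs(g_ba(g_ab(x_a)) - x_a))
    loss_b = np.mean(np.abs(g_ab(g_ba(x_b)) - x_b))
    return float(loss_a + loss_b)


def g_ab(x):
    return 2.0 * x + 1.0          # toy A -> B mapping


def g_ba_good(y):
    return (y - 1.0) / 2.0        # exact inverse of g_ab


def g_ba_bad(y):
    return y / 2.0                # not an inverse of g_ab


# Unpaired random "images" from the two domains.
rng = np.random.default_rng(0)
x_a, x_b = rng.random((8, 64, 64)), rng.random((8, 64, 64))
print(round(cycle_consistency_loss(x_a, x_b, g_ab, g_ba_good), 6))  # → 0.0
print(round(cycle_consistency_loss(x_a, x_b, g_ab, g_ba_bad), 6))   # → 1.5
```

Cycle consistency alone is also satisfied by trivial mappings such as the identity. Unpaired models like CycleGAN therefore combine it with adversarial losses that push `g_ab(x_a)` to look like real domain-B images.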

Direct unpaired image-to-image translation can sometimes be unpredictable due to the black-box nature of deep learning (DL) models. We therefore also developed a two-step approach that overcomes the limited-view problem in OA using raw OA signals. In the first step, a DL model is optimized to learn a common embedding space for two kinds of data: experimental limited-view data, and simulated data of matching geometry, which therefore has the same limited-view challenges. Both kinds of data can be encoded into and decoded from this space. In the second step, a separate DL model is optimized to in-paint the missing channel information of the simulated data, removing the limited-view challenges, as shown in Figure 7. Unlike other work, this approach completely removes the need to acquire any experimental data free of limited-view challenges.
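The in-painting step operates on raw signals arranged as a sinogram (channels by time samples), with some channels missing. The sketch below only illustrates this data layout and the masking. It substitutes plain linear interpolation across channels for the learned in-painting model, and the function name and toy data are hypothetical.

```python
import numpy as np


def inpaint_channels(sinogram, known):
    """Fill missing transducer channels by linear interpolation across channels.

    sinogram: (n_channels, n_samples) array of raw signals
    known:    boolean mask of length n_channels; False marks a missing channel
    """
    channels = np.arange(sinogram.shape[0])
    filled = sinogram.copy()
    for t in range(sinogram.shape[1]):
        # np.interp repeats the edge value outside the known channel range.
        filled[~known, t] = np.interp(channels[~known], channels[known], sinogram[known, t])
    return filled


# Toy sinogram whose value varies linearly across channels.
sino = np.outer(np.arange(8.0), np.ones(5))
known = np.array([True, True, False, False, True, True, True, True])
masked = np.where(known[:, None], sino, 0.0)   # zero out the missing channels
restored = inpaint_channels(masked, known)
print(np.allclose(restored, sino))  # → True
```

Interpolation works for small interior gaps. A limited view, however, typically removes a large contiguous block of channels at the edge of the array, where interpolation can only repeat the last known channel. That gap is what a learned in-painting model has to fill.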

Conclusion

The methods and datasets developed throughout the DLBIRHOUI project brought novel insights to the growing field of data-driven optoacoustics and contributed a large volume of multimodal experimental and synthetic data. We tackled numerous challenges in OA through multiple neural-network-based methodologies. We hope the dataset procured during the project will serve as a benchmark for research groups worldwide, allowing them to evaluate their methodologies. We also hope it will spark interest among researchers with limited or no access to the customized hardware required in the field of OA.

Co-authors

- Berkan Lafci, ETH Zurich*

- Anna Klimovskaia Susmelj, SDSC*

- Xose Luis Dean-Ben, ETH Zurich*

- Daniel Razansky, ETH Zurich*

- Fernando Perez-Cruz, SDSC

* Affiliations during the DLBIRHOUI project

Acknowledgments

The author would like to thank Berkan Lafci, Anna Klimovskaia Susmelj, Xose Luis Dean-Ben, and Daniel Razansky.

References

- Lafci, B., Ozdemir, F., Deán-Ben, X. L., Razansky, D., & Perez-Cruz, F. (2022). OADAT: Experimental and Synthetic Clinical Optoacoustic Data for Standardized Image Processing. arXiv preprint arXiv:2206.08612.

- Lafci, B., Robin, J., Deán-Ben, X. L., & Razansky, D. (2022). Expediting Image Acquisition in Reflection Ultrasound Computed Tomography. IEEE Transactions on Ultrasonics, Ferroelectrics, and Frequency Control, 69(10), 2837-2848. doi:10.1109/TUFFC.2022.3172713. (Reference paper for pyruct.)

- Merčep, E., Deán-Ben, X. L., & Razansky, D. (2018). Imaging of blood flow and oxygen state with a multi-segment optoacoustic ultrasound array. Photoacoustics, 10, 48-53.

- Klimovskaia, A., Lafci, B., Ozdemir, F., Davoudi, N., Deán-Ben, X. L., Perez-Cruz, F., & Razansky, D. (2021, December). Signal Domain Learning Approach for Optoacoustic Image Reconstruction from Limited View Data. In Medical Imaging with Deep Learning.

- Hu, Y., Lafci, B., Luzgin, A., Wang, H., Klohs, J., Deán-Ben, X. L., Ni, R., Razansky, D., & Ren, W. (2022). Deep learning facilitates fully automated brain image registration of optoacoustic tomography and magnetic resonance imaging. Biomedical Optics Express, 13, 4817-4833. (Reference paper for MRI and OA registration.)

Cover image courtesy of Elena Merčep: Merčep, E., Herraiz, J. L., Deán-Ben, X. L., & Razansky, D. (2019). Transmission–reflection optoacoustic ultrasound (TROPUS) computed tomography of small animals. Light: Science & Applications, 8(1), 18.