In this work, Swiss Data Science Center researchers Steven Stalder, Nathanaël Perraudin, Radhakrishna Achanta, Fernando Perez-Cruz, and Michele Volpi tackle a fundamental problem in modern Artificial Intelligence (AI) and Machine Learning (ML): the lack of transparency of black-box models. Specifically, they focus on how to unbox deep learning models for image classification problems.

Attribution in image recognition systems

One can train AI models to predict which object class – a dog, a cat, a bike, a person, etc. – is present in a given image. Although these models are highly accurate at this task, they do not provide any additional information on which portions of the image they relied on to arrive at their prediction. While humans can easily explain which part of an image contains a class of interest, it is challenging to understand the behavior of deep learning models due to their black-box nature. In computer vision, identifying the regions of an image that are responsible for a given prediction is called attribution.

Our contribution

We present an attribution method making use of a model, which we call the Explainer. The Explainer is a deep learning model trained to explain the output of a target model, which is an independently pre-trained image classifier. The innovation lies in the ability of the Explainer to directly provide explanations for all classes, without needing to access the trained classifier’s internals, nor having to retrain any parameters for new images. This makes our proposed method a flexible tool to be used with a wide range of classifiers and datasets.

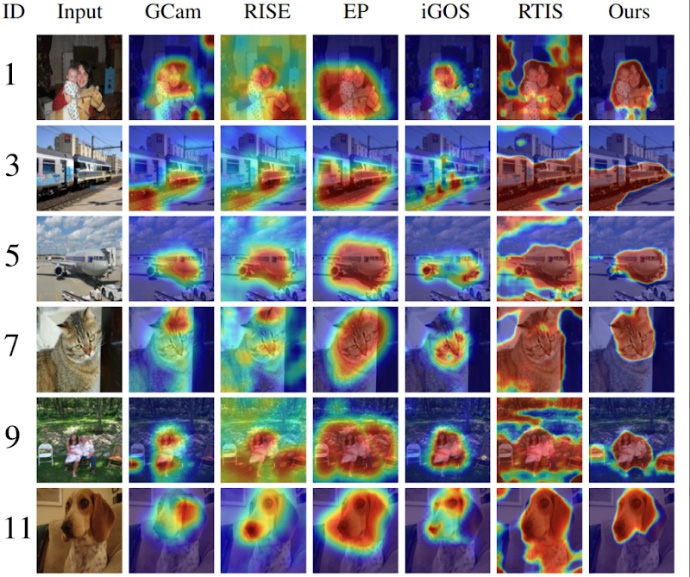

Our contribution shows significant improvements over other attribution methods on two common computer vision benchmarks. Most importantly, we demonstrate that the Explainer is more accurate in localizing salient image portions. Additionally, the Explainer is significantly more computationally efficient than most of the competing methods. A single forward pass through the Explainer directly provides accurate explanations for all possible object classes from the dataset – as shown in Fig. 1 and 2 – in less than a second.

The relevance of attribution and explainability of AI

Attribution techniques are an essential step toward the widespread adoption of AI in fields where providing a correct prediction alone is insufficient. In many application domains, users need to trust the models and the inferences provided by the AI. An obvious approach toward increasing trust in AI systems, is to understand why such models make a decision. Why is the model predicting the presence of a tumor in this scan? Why does the model confidently recognize this object class over these other ones? Besides these critical questions, our proposed model also provides easy insights into the mistakes a model makes. In this way, the Explainer highlights biases and errors in datasets used to train image recognition systems, and helps AI makers to develop fair (unbiased) AI systems.

Understanding the datasets and the complications they come with, as well as tools to see why complex models provide such decisions, is only a first step toward inherently interpretable models. In the future, developers of ML models should make an effort to not only achieve the best possible prediction accuracies but also provide ways to directly make their model’s decisions analyzable for non-expert human users. Once again, this approach will be especially critical in domains like medicine, the legal system, or other areas where trust in a model’s decisions is indispensable.

To go further

- Our paper was accepted at the Thirty-Sixth Conference on Neural Information Processing Systems (NeurIPS 2022). You can already have a look at it on arXiv: https://arxiv.org/abs/2205.11266.

- Stalder, S., Perraudin, N., Achanta, R., Perez-Cruz, F., & Volpi, M. (2022). What You See is What You Classify: Black Box Attributions. arXiv preprint arXiv:2205.11266.

- The code for the project is available at https://github.com/stevenstalder/NN-Explainer.

References

- M. Everingham, S. M. A. Eslami, L. Van Gool, C. K. I. Williams, J. Winn, and A. Zisserman. The Pascal visual object classes challenge: A retrospective. International Journal of Computer Vision, 111(1):98–136, Jan. 2015.

- R. R. Selvaraju, M. Cogswell, A. Das, R. Vedantam, D. Parikh, and D. Batra. Grad-CAM: Visual explanations from deep networks via gradient-based localization. In IEEE/CVF International Conference on Computer Vision, pages 618–626, 2017.

- R. C. Fong, M. Patrick, and A. Vedaldi. Understanding deep networks via extremal perturbations and smooth masks. In IEEE/CVF International Conference on Computer Vision, pages 2950–2958, 2019.

- V. Petsiuk, A. Das, and K. Saenko. Rise: Randomized input sampling for explanation of black-box models. In British Machine Vision Conference, 2018.

- S. Khorram, T. Lawson, and L. Fuxin. iGOS++: Integrated gradient optimized saliency by bilateral perturbations. In Proceedings of the Conference on Health, Inference, and Learning, CHIL ’21, page 174–182, New York, NY, USA, 2021. Association for Computing Machinery. ISBN 9781450383592. doi: 10.1145/3450439.3451865. URL https://doi.org/10.1145/3450439.3451865.

- P. Dabkowski and Y. Gal. Real time image saliency for black box classifiers. In Advances in Neural Information Processing Systems, pages 6967–6976, 2017.