PACMAN LHC

Particle Accelerators and Machine Learning

Abstract

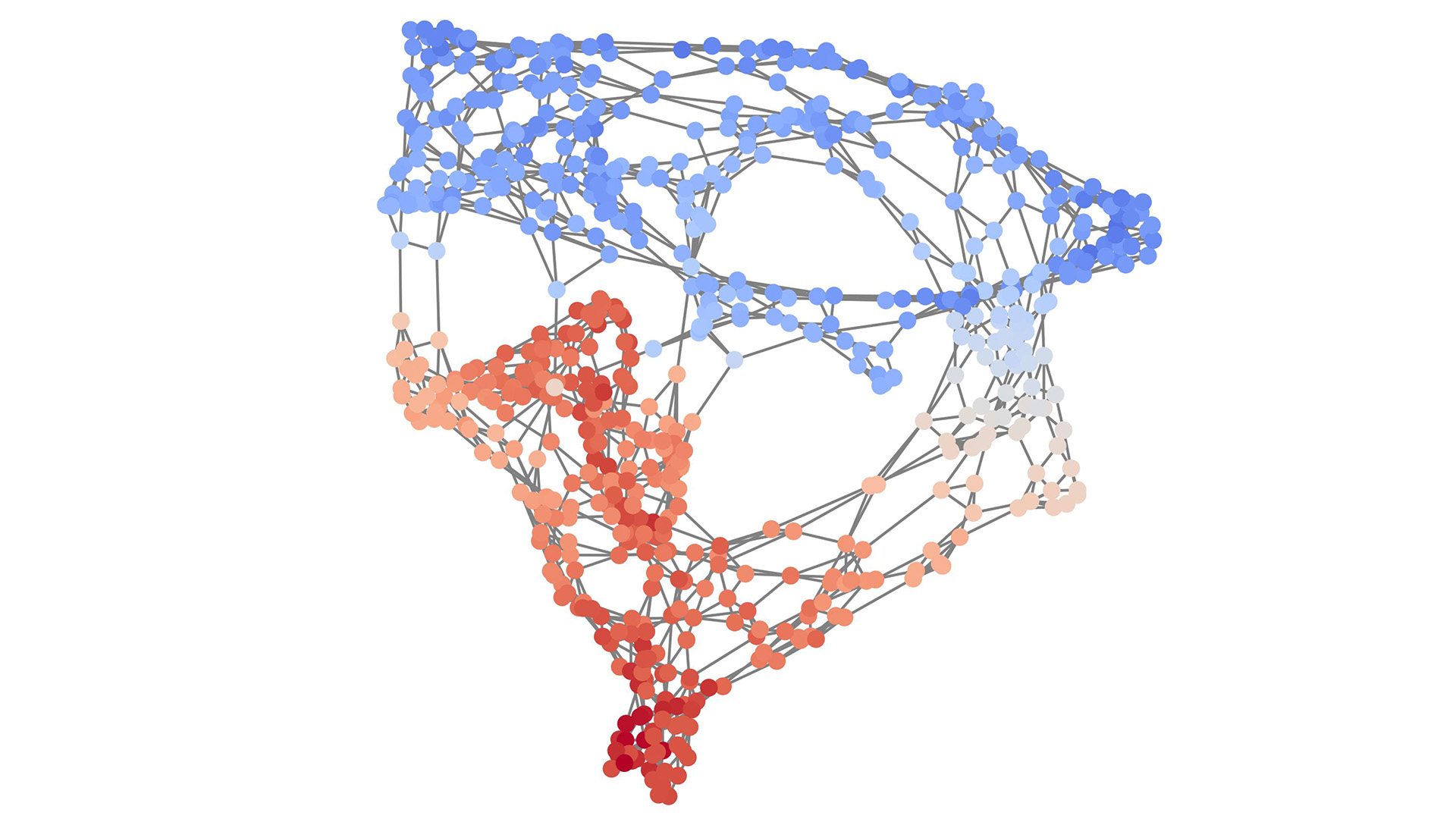

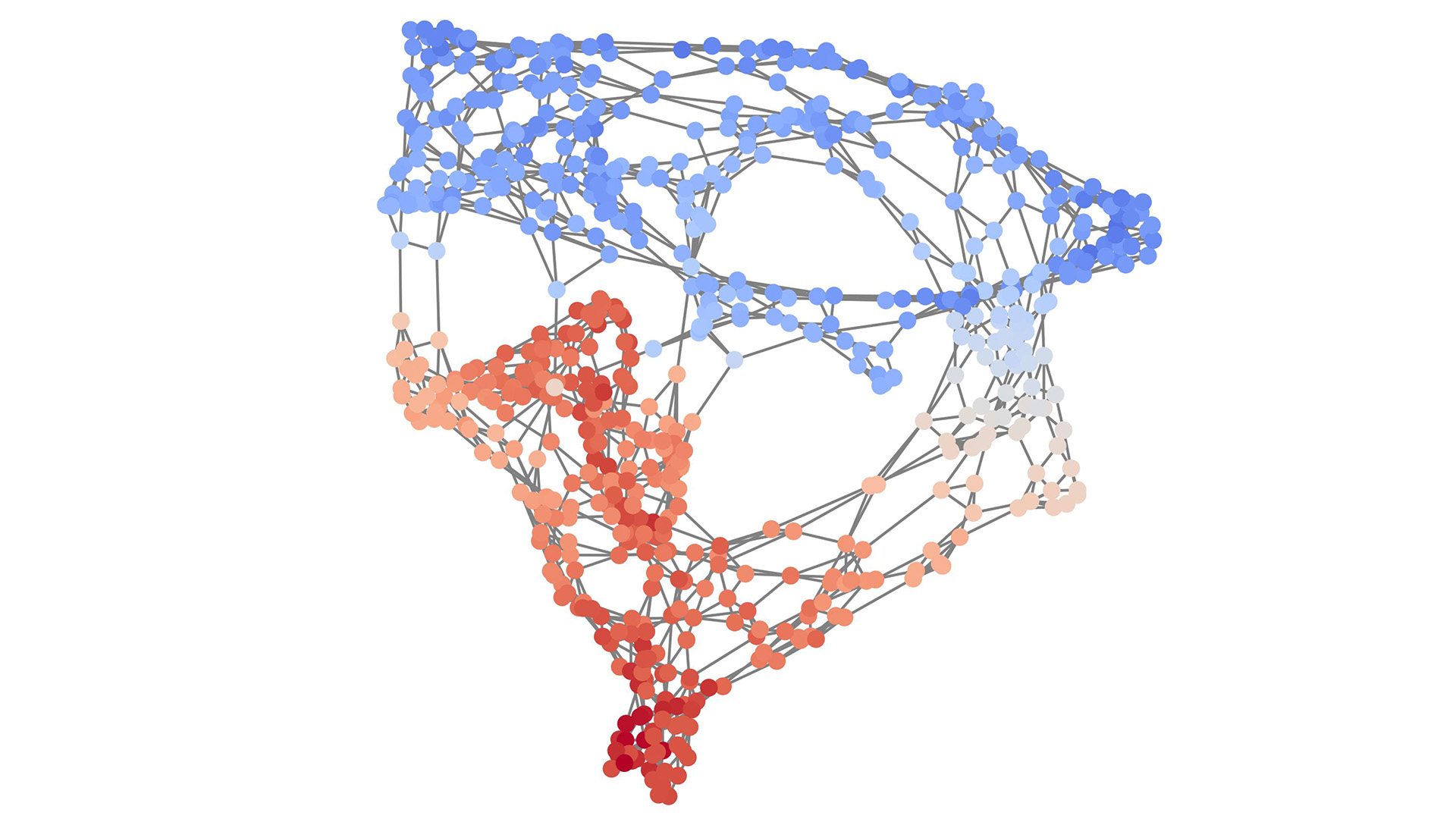

Particle accelerator facilities have a wide range of operational needs when it comes to tuning, optimisation, and control. At the Large Hadron Collider (LHC) at CERN, reducing beam losses lowers the risks associated with the high beam power and will lead to an increase in particle collision rates and a deeper understanding of the physics mechanisms. To meet these demands, particle accelerators rely on interactions with control systems, on fine-tuning of machine settings by operators, on online optimisation routines, and on databases of previous settings that were known to be optimal for some desired operating condition. We aim to bring Machine Learning (ML) to particle accelerator operation in order to increase performance. Each of these operational needs has a corresponding ML-based approach that could be used to supplement the existing workflows. In addition, the LHC findings will inform new designs for the High-Luminosity LHC (HL-LHC) and the Future Circular Collider (FCC), and help prepare for more effective FCC operation.

People

Collaborators

Ekaterina received her PhD in Computer Science from the Moscow Institute of Physics and Technology, Russia. Afterwards, she worked as a researcher at the Institute for Information Transmission Problems in Moscow and later as a postdoctoral researcher in the Stochastic Group at the Faculty of Mathematics at the University of Duisburg-Essen, Germany. She has experience with various applied projects on signal processing, predictive modelling, macroeconomic modelling and forecasting, and social network analysis. She joined the SDSC in November 2019. Her interests include machine learning, non-parametric statistical estimation, structural adaptive inference, and Bayesian modelling.

Guillaume Obozinski graduated with a PhD in Statistics from UC Berkeley in 2009. He did his postdoc and then held a researcher position until 2012 in the Willow and Sierra teams at INRIA and Ecole Normale Supérieure in Paris. He was then Research Faculty at Ecole des Ponts ParisTech until 2018. Guillaume has broad interests in statistics and machine learning and has worked on sparse modeling, optimization for large-scale learning, graphical models, relational learning and semantic embeddings, with applications in various domains from computational biology to computer vision.

Description

Goal:

Minimise beam losses, improve control of accelerator parameters, and prevent unnecessary machine interruptions.

Impact:

We aim to implement the paradigm of digital twins, i.e. a virtual representation of the real-world accelerator. At the same time, this could create new virtual and augmented reality opportunities, which are expected to be a major theme in the implementation of the future FCC.

Proposed approach:

We propose to gather a massive amount of accelerator data in collaboration with the LHC Operation groups in order to evaluate automatic and semi-automatic ways to optimise and steer the overall collider set-up, and to define the strategy for the operational aspects of future projects (i.e. HL-LHC and FCC). In parallel to the operational data accumulated during the physics runs, time will be devoted to machine development studies that test the robustness of the models developed for automated optimisation of the collider performance. In dedicated experiments, we will ask the trained model to predict and set new parameters to improve the beam lifetimes in the LHC. Depending on the results obtained, continuing the study and extending the models to other accelerators of the CERN complex and to future machines (HL-LHC) will be a natural path for continuing the collaboration.

Bibliography

- G. Apollinari et al. (including T. Pieloni), “High-Luminosity Large Hadron Collider (HL-LHC): Preliminary Design Report – Chapter 2: Machine Layout and Performances”.

- L. Coyle, “Machine learning applications for hadron colliders: LHC lifetime optimization and designing Future Circular Colliders”, presented at the Annual Meeting of the Swiss Physical Society 2018, EPFL. 2752252/SPS_talk.pdf
